Join on your computer or mobile app

Day trip to Dijon or online

Free registration required

inscriptionslead@u-bourgogne.fr

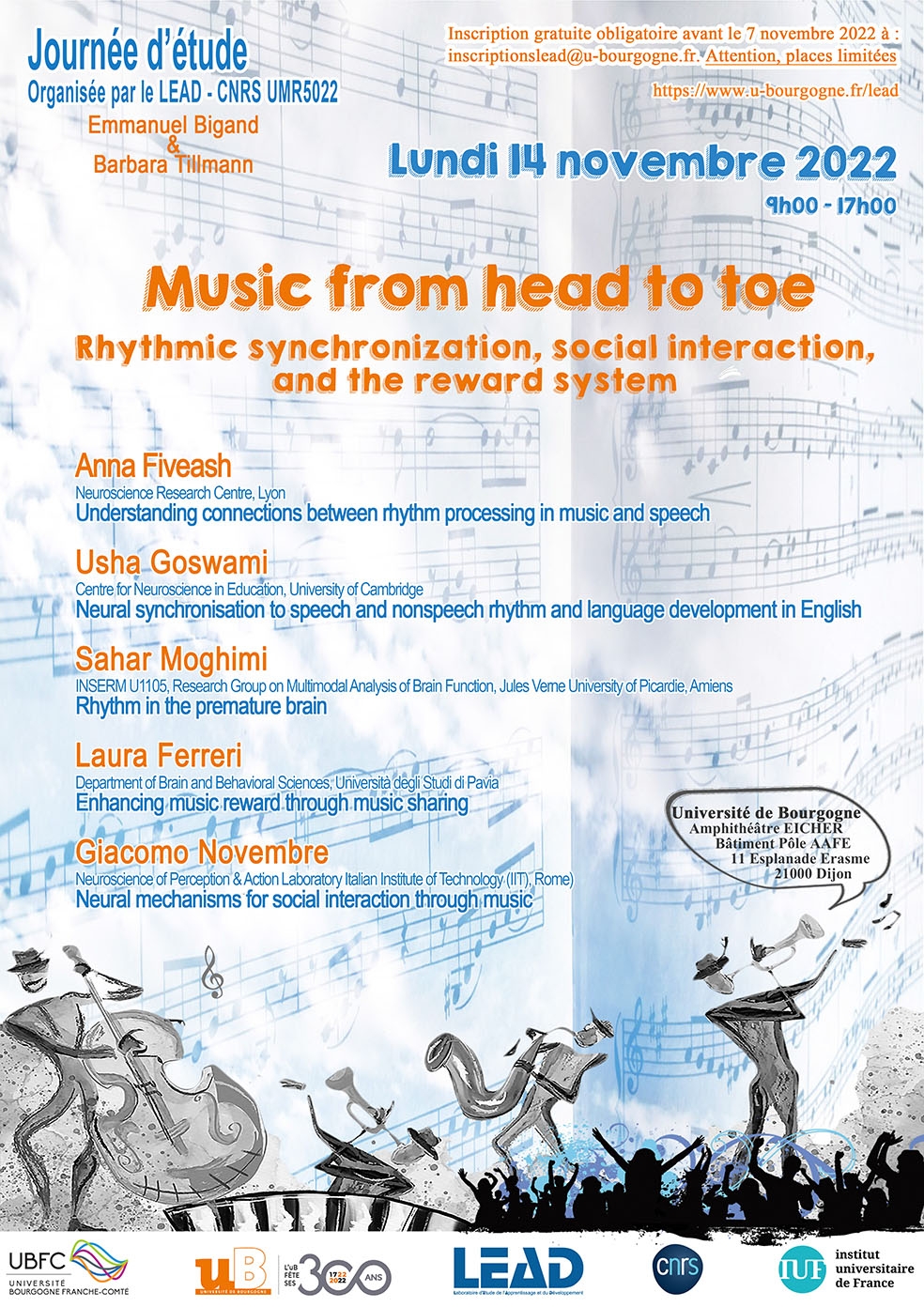

Music from head to toe: Rhythmic synchronization, social interaction, and the reward system.

9h Welcome

9h15 Introduction – E. Bigand & B. Tillmann (LEAD – CNRS UMR5022, Dijon)

9h30-10h30

Anna Fiveash (Lyon Neuroscience Research Centre):

Understanding connections between rhythm processing in music and speech

10h30-10h45 Break

10h45-11h45

Usha Goswami (Centre for Neuroscience in Education, University of Cambridge):

Acoustic Rhythm in Speech and Music: A Neural Approach to Individual Differences in Infants and Children

11h45-12h45

Sahar Moghimi (Inserm UMR1105, Groupe de Recherches sur l’Analyse Multimodale de la Fonction Cérébrale, Université de Picardie Jules Verne, Amiens):

Rhythm in the premature brain

12h45-14h15 Lunch break (boxed lunches)

14h15-15h15

Laura Ferreri (Department of Brain and Behavioral Sciences, Università degli Studi di Pavia):

Enhancing music reward through music sharing

15h15-16h15

Giacomo Novembre (Neuroscience of Perception & Action Laboratory, Italian Institute of Technology (IIT), Rome):

Neural mechanisms for social interaction through music

16h15 Discussion

17h End

ABSTRACTS

Understanding connections between rhythm processing in music and speech

Anna Fiveash

Lyon Neuroscience Research Centre

Similarities between music and speech/language in terms of their auditory features, rhythmic structure, and hierarchical structure reveal important connections between the two domains. However, the precise mechanisms underlying these connections remain to be elucidated. Based on structural similarities in music and speech, prominent music and speech rhythm theories, and previously reported impaired timing in developmental speech and language disorders, we propose the processing rhythm in speech and music (PRISM) framework. In PRISM, we suggest that precise auditory processing, synchronization/entrainment of neural oscillations to external stimuli, and sensorimotor coupling are three underlying mechanisms supporting music and speech/language processing. The current framework can be used as a basis to investigate rhythm processing in music and speech, as well as timing deficits observed in developmental speech and language disorders. It further outlines potential pathology-specific deficits that can be targeted in music rhythm training to support speech therapy outcomes. In this talk, I will outline the PRISM framework and its links with developmental speech and language disorders, discuss some new data investigating rhythmic priming in children with developmental language disorder, show some preliminary EEG findings investigating rhythm processing, and outline future research directions and implications of the PRISM framework.

***

Acoustic Rhythm in Speech and Music:

A Neural Approach to Individual Differences in Infants and Children

Usha Goswami

Centre for Neuroscience in Education, University of Cambridge

Recent insights from auditory neuroscience provide a new perspective on how the brain encodes rhythm in both speech and music. Using these recent insights, I have been exploring some key factors underpinning individual differences in children’s development of language and phonology, studying both infants and children with and without dyslexia. Individual differences in phonological processing skills are one of the key cognitive determinants of children’s progress in reading and spelling, are implicated in dyslexia across languages, and appear to originate in rhythm perception deficits. I will describe the neural oscillatory “temporal sampling” theoretical framework that informed our data collection, and discuss how we have been applying Temporal Sampling theory to understanding rhythmic processing in infants and children. Via a selective overview of findings from our infant longitudinal studies (SEEDS and BabyRhythm), as well as two different longitudinal dyslexia projects with children aged 7-11 years, I will aim to unpack neural features of rhythmic processing as related to language.

Goswami, U. (2019). Speech rhythm and language acquisition: An amplitude modulation phase hierarchy perspective. Annals of the New York Academy of Sciences, e14137.

Goswami, U. (2022). Language acquisition and speech rhythm patterns: An auditory neuroscience perspective. Royal Society Open Science, 9, 211855.

Mandke, K., Flanagan, S., Macfarlane, A., Wilson, A.M., Gabrielczyk, F.C., Gross, J. & Goswami, U. (2022). Neural sampling of the speech signal at different timescales by children with dyslexia. NeuroImage, 253, 119077.

Ní Choisdealbha, A., et al. (2022, in preparation). Neural phase angle from two months when tracking speech and non-speech rhythm linked to language performance from 12 to 24 months. https://psyarxiv.com/vjmf6/

***

Rhythm in the premature brain

Sahar Moghimi

Inserm UMR1105, Groupe de Recherches sur l’Analyse Multimodale de la Fonction Cérébrale, Université de Picardie Jules Verne, Amiens

As early as 25 weeks gestational age (wGA), structural components of the auditory system allow the fetus to hear the omnipresent rhythms of the maternal heartbeat and respiration, as well as environmental footfalls, speech, and songs. The brain is undergoing rapid structural and functional development during the third trimester. During this period, the synaptic connections are refined not only by spontaneous activity, but also by sensory-driven neural activity, preparing the developing neural networks for processing sensory information from the particular environment in which the infant will develop.

The ability to extract rhythmic structure is important for the development of language, music and social communication. Although previous studies show that infants’ brains entrain to the periodicities of auditory rhythms, and even to different metrical interpretations of ambiguous rhythms, the capabilities of the premature brain had not previously been explored.

I will present a series of studies conducted in premature neonates (30-34 weeks gestational age) using electroencephalography, demonstrating discriminative auditory abilities of premature neural networks for rhythmic characteristics of sound. This includes entrainment to the hierarchy of rhythmic sequences and detection of rhythmic violations.

Considering the importance of rhythm processing for acquiring language and music, we discuss how, even before birth, the premature brain is already learning this important aspect of the auditory world in a sophisticated and abstract way.

***

Enhancing music reward through music sharing

Laura Ferreri

Department of Brain and Behavioral Sciences, Università degli Studi di Pavia

Music represents one of the most pleasurable stimuli throughout our lives. Recent research has shown that pleasurable responses to music rely on the activity of the mesolimbic system, with a main role played by dopaminergic transmission.

Music is also a highly social activity, and the social sharing of an event is known to increase the perceived emotional intensity via the activation of the reward system.

Based on this evidence, we explored the hypothesis that sharing music could enhance music reward. This talk will present data obtained from online and real-life studies showing how sharing music listening can enhance the pleasure derived from music, and the associated benefits for cognition (i.e., memory) and pro-social behavior.

***

Neural mechanisms for social interaction through music

Giacomo Novembre

Neuroscience of Perception & Action Laboratory, Italian Institute of Technology (IIT), Rome

Joint music making is a powerful and widespread social behavior, observable across distinct ages and cultures, and having noteworthy prosocial effects. Yet, the neural mechanisms that permit a group of individuals to jointly make music are poorly understood. In this talk, I will discuss research highlighting the role of two distinct neural mechanisms supporting joint music making. The first neural mechanism, namely action simulation, permits musicians to use their previous experience to represent and simulate the performance of their co-performers. The second neural mechanism, namely inter-neural synchronization, facilitates real-time interpersonal information transfer. While both mechanisms aim to facilitate interpersonal coordination, action simulation has a purely endogenous origin while inter-neural synchronization is driven by exogenous cues. As such, action simulation and inter-neural synchronization represent two complementary pillars of joint music making.

***